Delivering Value

Delivering value in small, consistent increments is central to working in a lean manner: it gives you a tight feedback loop, which helps ensure you are delivering the most value possible. Recently, however, I was reminded to think carefully about my approach and not to assume that one approach fits every situation.

Continuous delivery

Continuous delivery is where you aim to always keep your software in a deployable state, have the ability to run automated deployments easily, and release small, valuable changes often rather than making large, infrequent deployments. I’m a huge believer in the benefits of continuous delivery and it’s something I always try to work towards, whatever project I am working on.

Continuous delivery is a topic in itself so I’m not going to go into too much detail here; Martin Fowler goes into further detail here.

What is value?

Value can mean different things to different people.

The value gained from a release can take the form of increased conversion, learning more about your market, or performance improvements, to name just a few.

These are all valuable outcomes of releasing software; however, it is important to keep in mind the actual goal of the software. If you haven’t read The Goal, you should definitely add it to your reading list. It warns against focusing on efficiencies in the wrong parts of your system. For example, spending time making one part of your process super efficient when you have a bottleneck further down the production line is wasted effort. Similarly, a focus on reducing waste may seem like added value; however, if that reduction in waste has a negative impact on your overall output, it may actually be reducing value overall.

My lesson learned

For a while now I’ve been working mainly on web projects, where continuously delivering tiny iterations can work brilliantly. Recently, however, I’ve been working on some native app projects. My immediate reaction was to “ship all the thingz”, but I was reminded that native apps are not the web. For a user, a release does not mean refreshing the browser but having to install an update. Not many users would want to update an app several times a day.

This is what made me start thinking about how the value is generated. In this instance, value is generated from users using the app. I was too focused on releasing the software quickly, because something is not done until it is live. However, in my push for continuous delivery I was in danger of forgetting where the real value came from. I was trying to optimise the wrong part of the system.

Examples

After the revelation that getting lots of tiny incremental updates out super fast isn’t always the best approach, I thought I would have a look at how often some of the apps I use release updates.

Below are the average, maximum, and minimum number of days between the last 10 deployments for Netflix, Spotify and Twitter.

| Days between releases | Netflix | Spotify | Twitter |
|---|---|---|---|
| Average | 3.5 | 4.4 | 6.5 |
| Max | 11 | 11 | 8 |
| Min | 1 | 1 | 3 |

The data for this was collected from APK Mirror.
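
The figures in the table are simple to work out once you have a list of release dates. Here is a minimal sketch in JavaScript, assuming the dates have been copied down by hand. The dates in the example are made-up placeholders, not the real release history of any of these apps, and `daysBetweenReleases` is just a name I picked for illustration.

```javascript
// Hypothetical release dates (ISO format). Placeholder values for
// illustration only, not real data from APK Mirror.
const releaseDates = ['2017-03-20', '2017-03-17', '2017-03-10', '2017-03-09'];

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Returns the average, max and min gap in days between consecutive releases.
function daysBetweenReleases(dates) {
  // Date.parse treats date-only ISO strings as UTC, so gaps are whole days.
  const times = dates.map((d) => Date.parse(d)).sort((a, b) => a - b);
  const gaps = [];
  for (let i = 1; i < times.length; i++) {
    gaps.push(Math.round((times[i] - times[i - 1]) / MS_PER_DAY));
  }
  const total = gaps.reduce((sum, gap) => sum + gap, 0);
  return {
    average: total / gaps.length,
    max: Math.max(...gaps),
    min: Math.min(...gaps),
  };
}

console.log(daysBetweenReleases(releaseDates));
```

With the placeholder dates above the gaps are 1, 7 and 3 days, so the maximum is 7 and the minimum is 1.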

These release intervals seem short and appear to contradict my earlier point that delivering small incremental amounts doesn’t work too well when not working on a web project. I’m sure there are many teams out there who would be proud to be averaging a release every 4 days.

However, compared to some of Netflix’s deployment pipelines, 4 days is very long. For example, here it shows that in some instances it takes Netflix 16 minutes from code check-in to live deployment.

Don’t get too stuck in your ways

This was a really welcome lesson in not getting too stuck in your ways, or in thinking “this is the way things should be done”.

Every situation is different and you always need to be looking at how value is generated and how it can be maximised. So in my situation, I guess it’s important to find the balance between deploying quickly and efficiently and keeping end users happy by delivering updates that they will find valuable.

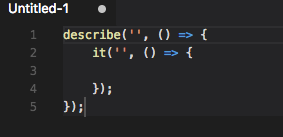

ES6 Mocha Snippets

ES6 Mocha Snippets is a great Visual Studio Code extension to help speed up writing your unit tests. Given how important unit testing is, anything that speeds it up is good in my opinion.

Installation

Installation is super straightforward through either the extensions bar or Visual Studio Code quick open command.

Browsing and installing extensions is easy in Visual Studio Code. Just bring up the extensions bar then search for “ES6 Mocha Snippets” and hit install.

Alternatively, if you already know which extension you want to install, use the quick open shortcut (⌘+P for Mac, Ctrl+P for Windows and Linux) then enter “ext install {name of extension}”.

So for this extension it would be:

ext install es6-mocha-snippets

Usage

This extension provides a range of snippets for ES6 Mocha tests, such as for “describe”, “it” and “before”.

To use, start typing the desired snippet, use the arrow keys to select the correct snippet, then tab or enter to complete the snippet.
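
For context, this is roughly the shape of test those snippets help you scaffold. It is a sketch rather than the extension’s exact output: the `sum` function and the test descriptions are made up for illustration.

```javascript
const { strictEqual } = require('assert');

// Hypothetical function under test, included so the example is self-contained.
const sum = (numbers) => numbers.reduce((total, n) => total + n, 0);

// Mocha provides describe/it/before as globals when run via the `mocha` CLI.
// The guard only exists so this file can also be loaded by plain Node.
if (typeof describe === 'function') {
  describe('sum', () => {
    let input;

    before(() => {
      // Runs once before the tests in this describe block.
      input = [1, 2, 3];
    });

    it('adds up every number in the array', () => {
      strictEqual(sum(input), 6);
    });

    it('returns 0 for an empty array', () => {
      strictEqual(sum([]), 0);
    });
  });
}
```

Each of the “describe”, “it” and “before” blocks here is the kind of boilerplate a snippet saves you from typing out by hand.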

A great extension to help speed up writing those all-important tests! Check out the Marketplace page for it here.

If you need a refresher, here is my introduction to JavaScript unit testing using Mocha.

Crontab Basic Example

Having recently moved from a Windows laptop to a Mac, I’ve found the loss of many features that I took for granted daunting. Losing the Task Scheduler is one such example. However, we have Crontab to the rescue.

Crontab

A cron job is just a command to run and the schedule that it should be run on.

Crontab is just a collection of those cron jobs. So it does appear we are losing a lot of functionality if you compare it to Task Scheduler, such as having triggers that are not based on time alone. I thought the loss of such features and the GUI would be a hindrance, but so far it really hasn’t been. I don’t think I’ve ever made a scheduled task that wasn’t based on a timed schedule, and the Task Scheduler GUI is usually massively unresponsive, especially when remoting on to a server.

-l

To look at what cron jobs are currently set up, use the command:

crontab -l

This will list out all of the commands and their respective schedules.

-e

To edit the crontab, use:

crontab -e

This will open the crontab file for editing; for me this opens in Vim by default.

To make a change in Vim, press “i” to enter “Insert” mode, make your changes, then exit by first pressing Escape to leave “Insert” mode, followed by typing “:wq” to write and quit.

cron schedule

A cron schedule is made up of 5 time fields: minute, hour, day of month, month, and day of week.

| * | * | * | * | * |
|---|---|---|---|---|
| minute | hour | day of month | month | day of week |

For example, to run a command at 2:30pm every day the schedule would be:

30 14 * * *

To have a command run every 10 minutes:

*/10 * * * *

To run a command at quarter past and quarter to every hour:

15,45 * * * *

crontab.guru is a great site where you can test out your schedule.
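
To make the five fields a bit more concrete, here is a small sketch in JavaScript that checks whether a given date falls on a schedule. It only understands `*`, `*/n`, single numbers and comma-separated lists, which is enough for the three examples in this post. Real cron supports more than this (ranges, month and day names, 7 as Sunday, and special handling when both day fields are restricted), and `matchesSchedule` and `fieldMatches` are names I made up, not part of cron.

```javascript
// Checks one cron field (e.g. "*/10" or "15,45") against a numeric value.
// `min` is the lowest legal value for the field, so steps count from there.
function fieldMatches(field, value, min) {
  return field.split(',').some((part) => {
    if (part === '*') return true;
    if (part.startsWith('*/')) return (value - min) % Number(part.slice(2)) === 0;
    return Number(part) === value;
  });
}

// Returns true if `date` (in local time) falls on the 5-field schedule.
function matchesSchedule(schedule, date) {
  const [minute, hour, dayOfMonth, month, dayOfWeek] = schedule.trim().split(/\s+/);
  return (
    fieldMatches(minute, date.getMinutes(), 0) &&
    fieldMatches(hour, date.getHours(), 0) &&
    fieldMatches(dayOfMonth, date.getDate(), 1) &&
    fieldMatches(month, date.getMonth() + 1, 1) && // cron months are 1-12
    fieldMatches(dayOfWeek, date.getDay(), 0) // 0 is Sunday
  );
}

const halfPastTwo = new Date(2020, 0, 6, 14, 30); // 6 Jan 2020, 2:30pm
console.log(matchesSchedule('30 14 * * *', halfPastTwo)); // true
console.log(matchesSchedule('*/10 * * * *', halfPastTwo)); // true
console.log(matchesSchedule('15,45 * * * *', halfPastTwo)); // false
```

For anything beyond a quick sanity check, testing the schedule on crontab.guru is the safer option.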

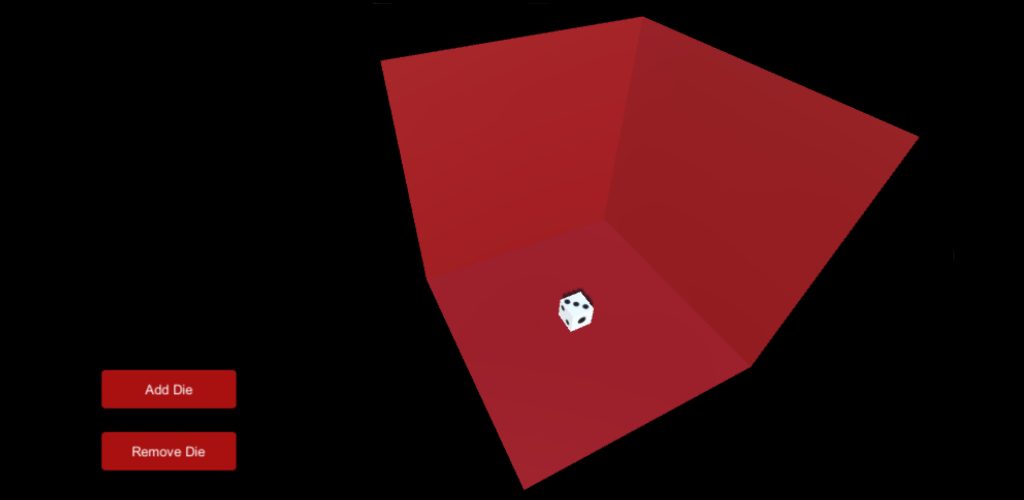

Unity Android Movement

I’ve been teaching myself Unity and wanted to make a phone app that used the movement from the phone.

It turns out that using the movement from your phone is pretty easy. Here’s how I did it in my first Unity app.

The code

Traditionally I’m a C# developer, so naturally I chose to write my Unity scripts in C#, but you do have the option of choosing JavaScript.

To get the movement from your phone, all you need to do is:

Vector3 movement = new Vector3(Input.acceleration.x, Input.acceleration.z, Input.acceleration.y);

I couldn’t believe how easy that was!

All we are doing here is creating a new Vector3 from the acceleration the phone reports and using it as our movement value.

Z and Y axis

The Unity reference for Vector3 can be found here. Below is the constructor for a Vector3:

public Vector3(float x, float y, float z);

The keen-eyed among you will notice that I have passed the Z and Y values the other way around.

By swapping the Z and Y, the phone’s resting position is lying flat rather than held upright. A few people who tested my app said that this felt much more natural.

FixedUpdate()

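I put the code for the movement in FixedUpdate() rather than Update(). FixedUpdate() is called on a fixed timestep, in step with the physics engine and independent of the frame rate, which makes it the right place to apply forces to a rigid body.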
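
FixedUpdate() is called at a fixed interval, while Update() is called once per rendered frame. One way to picture the difference is the classic “accumulator” game loop below. This is a generic illustration in JavaScript with made-up names (`runLoop`, `counts`), not Unity code and not a description of how Unity schedules its callbacks internally.

```javascript
// Simulates a game loop: the per-frame step runs once per rendered frame,
// while the fixed step runs zero or more times per frame at a constant interval.
function runLoop(frameDurations, fixedStep) {
  let accumulator = 0;
  const counts = { update: 0, fixedUpdate: 0 };
  for (const frameTime of frameDurations) {
    accumulator += frameTime;
    // Catch up on however many fixed steps fit in the elapsed time.
    while (accumulator >= fixedStep) {
      counts.fixedUpdate += 1; // physics work would go here
      accumulator -= fixedStep;
    }
    counts.update += 1; // per-frame work would go here
  }
  return counts;
}

// Three uneven frames (in milliseconds) with a 20 ms fixed step.
console.log(runLoop([10, 50, 20], 20)); // { update: 3, fixedUpdate: 4 }
```

The short first frame gets no fixed step at all and the long second frame gets three, so work done in the fixed step advances by the same amount of simulated time no matter how uneven the frames are.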

Rigid Body

To make the movement look more natural, I applied the movement as force rather than just moving the position of my object.

To do this, I added a rigid body component to my object.

rb = GetComponent<Rigidbody>();

The above gets the rigid body from your game object and assigns it to a private variable. Then, to apply the movement as force:

rb.AddForce(movement * speed);

The speed is just a private variable in the script used to scale up the movement as needed.

Change to dynamic collider

While I was testing my app, my objects would sometimes fly through the other box colliders in the scene. This had me scratching my head for a while. All my colliders looked correct and the box would only go through the wall intermittently.

It turns out that fast-moving objects can sometimes pass through a collider. To get around this, you can set the collision detection on the rigid body to continuous dynamic. While not as performant, sometimes it will be needed.
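
To see why a fast object can skip straight past a collider, here is a toy one-dimensional model in JavaScript. It is not Unity code and not how Unity’s physics engine is implemented. The wall position, speeds and function names are all invented, purely to show the difference between checking where an object is at each step and checking the path it travelled between steps.

```javascript
// A thin wall occupying x in [10, 10.5], with an object moving along x.
const wall = { min: 10, max: 10.5 };

// Discrete check: only looks at where the object is at the end of each step.
function hitsWallDiscrete(startX, speed, stepSeconds, steps) {
  let x = startX;
  for (let i = 0; i < steps; i++) {
    x += speed * stepSeconds;
    if (x >= wall.min && x <= wall.max) return true;
  }
  return false;
}

// Continuous (swept) check: looks at the whole segment covered in each step.
function hitsWallContinuous(startX, speed, stepSeconds, steps) {
  let x = startX;
  for (let i = 0; i < steps; i++) {
    const nextX = x + speed * stepSeconds;
    if (x <= wall.max && nextX >= wall.min) return true;
    x = nextX;
  }
  return false;
}

// Slow object: 5 units/s at 0.02 s per step moves about 0.1 units per step.
console.log(hitsWallDiscrete(0, 5, 0.02, 200)); // true
// Fast object: 150 units/s moves about 3 units per step, stepping over the wall.
console.log(hitsWallDiscrete(0, 150, 0.02, 200)); // false
console.log(hitsWallContinuous(0, 150, 0.02, 200)); // true
```

The swept check does more work per step, which fits with continuous detection being the less performant option.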

I got the answer to this puzzle from the Unity forum here.

My App!

If you want to see my code in action, you can get my first Unity app from the Google Play store here.

Technical debt

You hear the term “technical debt” a lot in development. You also hear a lot of discussion about how to deal with it. Here I’m going to discuss why I don’t like the term.

What is technical debt

From my experience, “technical debt” is a term applied to any technical task that isn’t directly related to a new feature.

Examples of this could be refactoring an old piece of code, scripting the creation of a site, or putting a new type of automated test in place.

Why I don’t like the term

I’m not saying technical tasks aren’t important. I hope they are or I’d be out of work.

The term “technical debt” hides the real reason that the technical task should be carried out. As I see it, if someone asks why you are doing that task, your response might as well be “you don’t need to worry about it, it’s technical”.

It implies that there isn’t value to be gained from doing that work. Like paying off a debt, you aren’t improving your balance, just getting back to zero.

It’s a very rare situation that you have unlimited resources to polish and refine something. Decisions are made about when “enough is enough”, and I feel that is a benefit of working in an agile environment. You get as much value as you can for the decided budget.

Focus on value

You need to ask yourself “why do I feel this task needs doing?”.

As with adding a new feature, you would (I hope) set out your success criteria, the problem you are trying to solve and the value you hope to gain.

Let’s take an old piece of code that could do with some refactoring as an example. When was this code last updated? Are you likely to need to be in this area of the solution anytime soon? If you haven’t touched the code for a long time and it’s not throwing errors, why does it need refactoring? What’s the value to be gained here?

It’s also good to remember that there are different types of value. New features aren’t the only things that deliver value. Scripting the creation of your server for example is something that could easily be classified as technical debt. However, being able to recover your server quickly in the event of failure sounds pretty valuable to me.

Then what should we call it?

Perhaps the distinction between “features” and other work is part of the problem? Why does a piece of work need to be defined separately to another?

Work is work and you should be working on the most valuable thing you can. It shouldn’t matter if that work is adding a new feature or updating an old one.

How we should deal with technical issues

I would say it depends on what this “debt” is.

If it is indeed a piece of “work” then treat it as such. Examine the value you expect to get from it and prioritise it accordingly.

For any refactoring “debt” I really like Uncle Bob’s boy scout rule. Anyone who knows me knows I’m a pretty big fan of Uncle Bob (if you’ve not heard of him, check him out here). Basically, you always leave the code in a better state than when you found it. By following this principle, the code base should be cleaned up relatively quickly while still delivering value.

Don’t get me wrong, refactoring is extremely important, but I would be wary of refactoring for the sake of refactoring. If you are refactoring something so new functionality can be added or some other value gained, fantastic. If you are refactoring and don’t know the value that will come from it, why are you doing it?

tl;dr

There is no such thing as technical debt.

There is, however, more work to do. Treat it like you would any other piece of work. Understand the expected value and plan accordingly.