Cat Command

Here is another Linux command that I want to remember: the cat command.

My Linux command posts

These posts are NOT supposed to be exhaustive documentation for the commands they cover, but are here as a reminder for myself as I’ve found them useful and my memory is terrible.

The cat command

The cat (concatenate) command is pretty useful. It can be used to make files, look at the contents of a file and combine files to make a new one.
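Here is a minimal sketch of those three uses. It runs in a throwaway directory so no real files are touched, and the file names are made up for illustration:

```shell
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# 1. Make a file: with no file arguments cat copies standard input
#    (here a heredoc) and the > redirect writes it to notes.txt.
cat > notes.txt <<'EOF'
first line
second line
EOF

# 2. Look at the contents of a file.
cat notes.txt

# 3. Combine files into a new one; output follows the argument order.
printf 'third line\n' > more.txt
cat notes.txt more.txt > combined.txt
cat combined.txt
```

Interactively, `cat > notes.txt` with no heredoc reads whatever you type until you press Ctrl+D.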

Why I needed it

The task it came in useful for was combining several days’ worth of logs from several load-balanced servers.

How I used it

cat file1.txt file2.txt > myNewFile.txt

Much easier than going into each file, copying the contents, pasting them into a new file and remembering which files I had already done.
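The same idea scales to lots of files with a shell glob. This sketch assumes a hypothetical layout of one folder per server and one log per day, named by date. The shell expands a glob in sorted order, so date-based names keep each server’s days in order. Writing the combined file outside the folder the glob matches stops cat from ever reading its own output.

```shell
# Throwaway directory with a hypothetical layout:
# logs/<server>/<YYYY-MM-DD>.log
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir -p logs/web01 logs/web02
printf 'web01 day1\n' > logs/web01/2016-10-01.log
printf 'web01 day2\n' > logs/web01/2016-10-02.log
printf 'web02 day1\n' > logs/web02/2016-10-01.log
printf 'web02 day2\n' > logs/web02/2016-10-02.log

# The glob expands in sorted order: every web01 day, then every web02 day.
# The output file sits outside logs/ so the glob can never match it.
cat logs/*/*.log > all-servers.log

# >> appends to an existing file instead of overwriting it.
printf 'web03 day1\n' > web03.log
cat web03.log >> all-servers.log

cat all-servers.log
```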

Here is the link to my other Linux command posts.

ASP.NET Core MVC

After setting up my first ASP.NET Core MVC site I’ve looked into expanding it with a test project and taking advantage of more of the new features.

Test project

One of the first things I wanted to learn how to do in ASP.NET Core was how to get some unit testing going.

Firstly I set up the solution structure by creating a source directory and a separate test directory. At the root of the solution I added a global.json file:

{
  "projects": [
    "src",
    "test"
  ]
}
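The same layout can also be created from a terminal. This is a sketch in a scratch directory, and MySolution is a hypothetical name. As I understand it, the "projects" array is what tells the project.json-era tooling which folders contain projects. Fittingly, cat with a heredoc writes the file.

```shell
# Scratch copy of the solution layout; "MySolution" is a hypothetical name.
root="$(mktemp -d)/MySolution"
mkdir -p "$root/src" "$root/test"
cd "$root" || exit 1

# Write global.json at the solution root, listing the project folders.
cat > global.json <<'EOF'
{
  "projects": [ "src", "test" ]
}
EOF

ls
cat global.json
```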

To create the test project, there is a very helpful dotnet command you can run from the test directory:

dotnet new -t xunittest

The -t xunittest option to the new command is a real time saver: it sets the testRunner in the project.json file to xunit and adds the needed dependencies.

I’ve not used xUnit before, but apart from a few syntax differences from NUnit, it seems pretty straightforward.

For a mocking framework I went with Moq. It’s not my first choice, but I read it worked with ASP.NET Core so I went for it. Below is the dependency to add to the project.json:

"Moq": "4.6.38-alpha",

Once all the tests are in place, you just need to run the following command in the test project:

dotnet test

The docs for testing in ASP.NET Core can be found here.

It was a big relief to find that setting up unit testing was straightforward as I’m loving learning ASP.NET Core and I didn’t want it to be a blocker.

Tag helpers

Although ASP.NET Core still lets you use Razor as your view engine, there are some updates you can take advantage of. On my little test site I was setting up a form using the BeginForm HTML helper:

@using (Html.BeginForm())
{
    // form goodness goes here
}

However, after reading through the ASP.NET Core docs I found the new tag helpers. I was sceptical at first, as I’ve always found HTML helpers useful and didn’t think they needed replacing.

But now I’m a convert: tag helpers are pretty cool.

So my form became:

<form asp-controller="Home" asp-action="Index" method="post" id="usrform">
    <!-- Input and Submit elements -->
    <button type="submit">Get name</button>
</form>

No longer do you need to fill in an HTML helper’s signature; you just write the markup you need with the required attributes. For example, to set the controller:

asp-controller="Home"

This makes the markup much nicer for everyone to read and removes the need to know how to use each helper. For example, I would much rather read:

<label class="myClass" asp-for="MyProperty"></label>

than:

@Html.Label("MyProperty", "My Property:", new {@class="myClass"})

I’m so glad I don’t have to remember that awful syntax for adding classes to HTML helpers.

_ViewImports.cshtml

In order to use tag helpers you need to make them available in a _ViewImports.cshtml file in the Views folder.

@addTagHelper "*, Microsoft.AspNetCore.Mvc.TagHelpers"

The above adds all the tag helpers (that is what the * means) in the Microsoft.AspNetCore.Mvc.TagHelpers assembly. By following the same convention you can make custom tag helpers available as well.

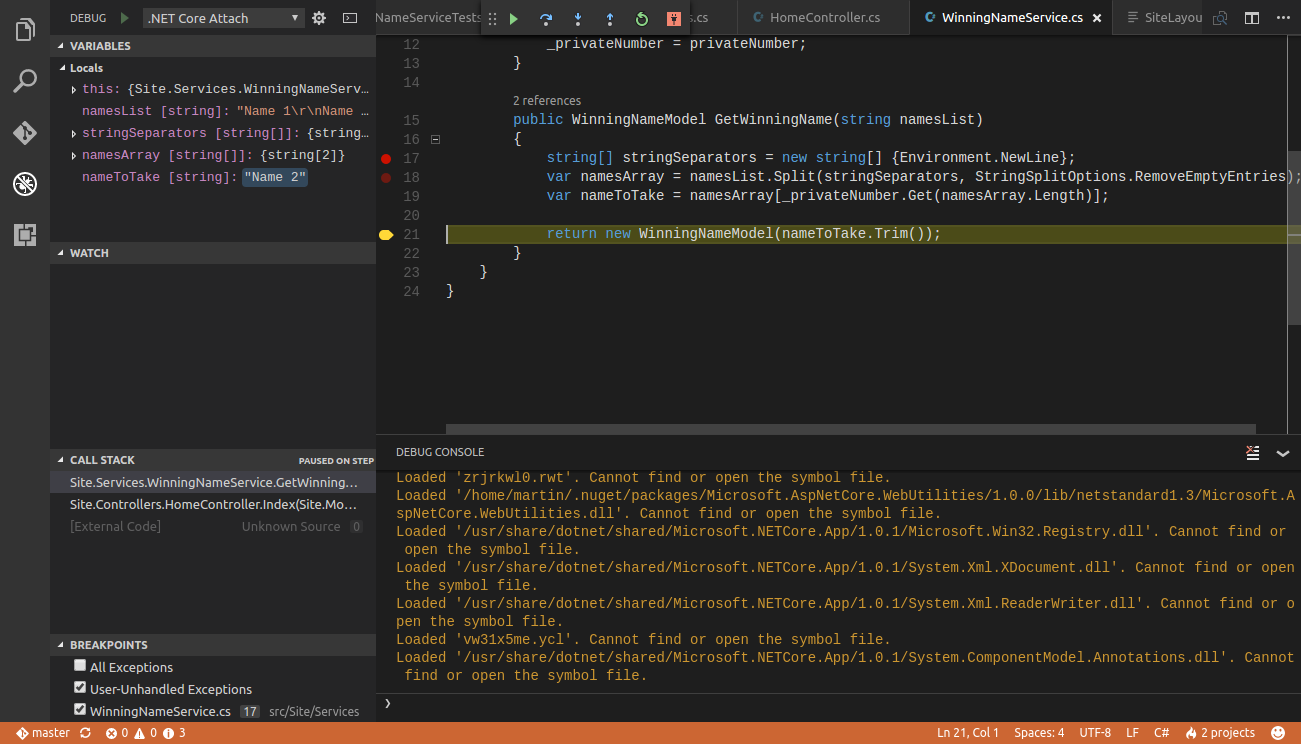

Debugging

While unit testing massively reduces the need to use the debugger, I’m only human.

For a free IDE, the debugging capabilities of VSCode are fantastic.

Since an MVC site isn’t a console application, rather than using the VSCode launch.json and the built-in debug launcher, I attached the debugger to the running process, which worked well.

Running “dotnet run” from the application’s source directory gets the MVC site up and running. Then, from the debug panel, choose the attach to process option.

As you can see in the screenshot, the debugger is pretty comparable to the debugger in the full version of Visual Studio. You can set breakpoints, watch variables and step through code.

Static files

The typical location for static assets in an ASP.NET Core MVC app is in a folder called wwwroot at the root of the project.

Then in the WebHostBuilder set the content root to be the current directory.

var host = new WebHostBuilder()
    .UseKestrel()
    .UseContentRoot(Directory.GetCurrentDirectory())
    .UseStartup<Startup>()
    .Build();
host.Run();

Static assets will then be available using a path relative to the wwwroot folder. For example:

<link rel="stylesheet" href="~/css/bootstrap.css" >
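To make that mapping concrete, here is a small shell sketch that turns a ~/ path from a view into the file it should be served from. It assumes the default web root (a wwwroot folder under the content root) and that app.UseStaticFiles() is enabled in Startup.Configure. to_wwwroot_path is a hypothetical helper, not part of ASP.NET Core.

```shell
# Sketch only: assumes the default web root, a "wwwroot" folder
# under the content root (here, a scratch directory).
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir -p wwwroot/css
: > wwwroot/css/bootstrap.css   # empty placeholder stylesheet

# Hypothetical helper: "~/" is the app root, which the default static
# files setup serves from wwwroot/, so swap one prefix for the other.
to_wwwroot_path() {
  printf '%s\n' "$1" | sed 's|^~/|wwwroot/|'
}

path=$(to_wwwroot_path '~/css/bootstrap.css')
echo "$path"
[ -f "$path" ] && echo "found"
```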

Example site

My example site so far is on GitHub here.

Grep Command

As Linux continues to blow my mind, I thought I’d better start writing down some of the commands that I’ve been finding useful before I forget them.

My Linux command posts

These posts are NOT supposed to be exhaustive documentation for the commands they cover, but are here as a reminder for myself as I’ve found them useful and my memory is terrible. If they happen to help someone else, then awesome, and if anyone wants to let me know of any more, even better.

The grep command

The grep command searches text for a regular expression and prints the lines that match. The result can then be redirected to a new file.
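Here is a small sketch of grep matching regular expressions rather than just plain text, using a made-up log file. -E switches on extended regular expressions so that | means “or”, and ^ anchors a match to the start of a line.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# A made-up log file to search.
cat > app.log <<'EOF'
2016-10-01 09:00:01 INFO Started
2016-10-01 09:00:05 ERROR Timeout calling payments
2016-10-01 09:01:17 WARN Slow response
2016-10-02 10:12:44 ERROR Timeout calling payments
EOF

# -E enables extended regular expressions, so | means "or".
grep -E 'ERROR|WARN' app.log > problems.txt

# ^ anchors the pattern to the start of the line: only 2 October entries.
grep '^2016-10-02' app.log > oct2.txt

cat problems.txt oct2.txt
```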

Why I needed it

Recently I was asked to get certain details out of a very large log file. My first thought was to open the log in Excel and start filtering away. But then a Linux-loving colleague showed me the grep command.

How I used it

grep "the text I was searching for" mylogfile.log > newfile.txt

I couldn’t believe how easy and fast this made a boring task. Definitely a command I need to remember.
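A few other options help with big log files. They are shown here against a hypothetical log, and all of them (-i, -c, -v, -n) are standard grep flags:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Hypothetical lines standing in for a very large log.
cat > mylogfile.log <<'EOF'
GET /orders 200
get /orders 500
GET /health 200
POST /orders 201
EOF

# -i ignores case, so "GET" and "get" both match.
grep -i 'get /orders' mylogfile.log > orders.txt

# -c prints how many lines match instead of the lines themselves.
grep -c ' 200$' mylogfile.log        # prints 2

# -v inverts the match: keep everything except health checks.
grep -v '/health' mylogfile.log > no-health.txt

# -n prefixes each matching line with its line number.
grep -n ' 500$' mylogfile.log        # prints 2:get /orders 500
```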

The Nature of Software Development book

The book is both a great introduction to agile development and a reminder of how to focus on what value is and how to get it.

Who the book is for

I really enjoyed the style of this book. There aren’t a lot of words on a page and each page has pictures. That might not make me sound the sharpest tool in the box, and maybe I’m not, but for someone who reads a couple of minutes here and there on a commute it helps a lot.

I’ve been working in agile environments for a few years now and I still found this book useful. Its simple layout and message of “keep it simple” are a really nice reminder that if things are complicated, you are probably doing them wrong.

In the intro, Uncle Bob describes this book as a must for CTOs, directors of software and team leaders. While I think that is true, I think it would be better for a wider audience to read this book. It would be amazing if this book was read by the recipients of software. Agile development has a lot of benefits, but those benefits are hard to achieve if all parties aren’t bought into the process.

What is a feature?

A lot of the book is centered around the importance of features.

We should plan by features, we should build by features, we should even grow our teams around features. Working towards features does have a lot of advantages. Planning is easier with smaller chunks, it is easier to pivot the project and while I think estimates are rarely helpful, it is easier to estimate smaller pieces of work.

However, I wonder if “feature” is the best term for what we should be breaking the work down into. Would something more in keeping with the agile ideas of always being in a releasable state and getting value from releases, like “deliverable”, be more appropriate? Sometimes I find that the term “feature” is too easily interchangeable with “project”.

Once the term feature gets swapped for project, it becomes difficult to realise agile benefits such as being able to work on the most valuable thing at the time, as people often like the feeling of completeness that comes with finishing a project.

Bug free

In order to keep delivering value and enable flexibility, the code has to be kept bug free.

One way Jeffries says to achieve this is to continually design the system as it grows. While I have always refactored code as I develop as part of the TDD cycle, this made me think that sometimes I need to take the brave choice to refactor the design of the system. From a business’s perspective this may not seem like the popular choice as speed is often seen as crucial, but keeping the system clean and flexible is important in the long term.

Another example of going slow to go fast is ensuring the system has good test coverage. Unit tests are an essential part of development, but acceptance tests can be massively helpful in enabling rapid releases of small features. Knowing that new code hasn’t broken existing features helps to reduce manual testing and increase confidence in the system.

Up front planning

I find planning a very interesting topic in agile development.

Large detailed plans seem to make people feel comfortable and think that they are reducing risk. However, this rarely seems to be the case. Goals and measures of value are important, and so is knowing the current direction towards those goals. But a detailed long-term plan is rarely going to be helpful, unless you can see the future. Worse, it can damage the success of a project, because it may stop you feeling like you have the flexibility to change direction.

Whip the ponies harder

There is an interesting chapter in the book dealing with the dangers of just putting more pressure on the team to get more out of them. This is extremely unlikely to work very well. Due to the extra pressure, mistakes will be made, best practices will slip and defects will be introduced into the system. As previously mentioned, overall this will make the project take longer.

Jeffries goes on to say that analysing and removing any sources of delay for the team is likely to have a much larger impact. The same goes for helping the team to improve, for example through training or by helping them analyse how they are working.

I would have to say I wholeheartedly agree with this point. Maybe I’ve just been very lucky in my career to have only met hard-working developers, but I have never worked with someone who I thought “if they worked harder this would go a lot faster”. Training and working to help resolve the team’s issues has always been a much better way to help increase the flow of work.

A very helpful book

I really enjoyed this book. It’s reminded me to take a step back every now and again, slow down and think “am I doing this the best way?” when it comes to agile development.

It’s so easy to say “yes I know how you should work in an agile manner”, but it’s also very easy to get bogged down in processes and “agile” methods.

The Nature of Software Development has made me think more about why we work in an agile way in the first place, and that must be a good thing.

ASP.NET Core

I’ve heard a lot of good things about ASP.NET Core so I thought I’d check it out. It feels very different to the Microsoft development I’m used to, but after a bit of a learning curve I’m very impressed.

Why ASP.NET Core?

Rather than just bringing out a new version of ASP.NET with version 5, Microsoft decided to give us ASP.NET Core 1.0.

So far it seems pretty cool and addresses some of the issues with good old Microsoft development.

Microsoft can describe it better than I can, so here it is:

ASP.NET Core is a new open-source and cross-platform framework for building modern cloud based internet connected applications, such as web apps, IoT apps and mobile backends.

ASP.NET Core has a number of architectural changes that result in a much leaner and modular framework. ASP.NET Core is no longer based on System.Web.dll. It is based on a set of granular and well factored NuGet packages. This allows you to optimize your app to include just the NuGet packages you need. The benefits of a smaller app surface area include tighter security, reduced servicing, improved performance, and decreased costs in a pay-for-what-you-use model.

According to Microsoft, there are a lot of benefits to moving to the new framework.

Some of the main benefits that made me want to try it out include:

- Lightweight, modular system

- Cross platform (yes, that’s right, it runs on Linux and Mac!)

- Use a text editor rather than Visual Studio

- Open source

Installing

As I’ve mentioned, one of the most exciting things about ASP.NET Core is that it is cross platform.

It can run on Windows, Mac, Docker and various Linux platforms. My mind was blown when I ran my first C# application on Linux!

Download and install instructions for your chosen platform are available here.

I purposefully avoided the Windows installation with Visual Studio as I was keen to try it with just a text editor. Visual Studio is great and I love it, but I do find it can feel slow and clunky, especially on older laptops.

Basic set up

After installation you can have a .NET application running with just five commands:

mkdir hwapp
cd hwapp
dotnet new
dotnet restore
dotnet run

MVC

With ASP.NET Core being pretty different from what I’m used to I wanted to hold on to something I knew, so I set out to build an MVC site with it.

Overall, things went pretty smoothly, but there were definitely some head-banging moments. Mainly these were caused by the documentation being so bad. Maybe I just need to get my head around Core more first, but I didn’t find the docs very useful at all. They spend a lot of time explaining the concepts and patterns behind MVC itself rather than how to set up a site in Core.

The tutorials weren’t a great deal of help either, as they wanted you to use the Visual Studio templates, which I was avoiding because I wanted to get it working with as little boilerplate and templating as I could.

Controller

After running the initializer, a controller was my first step.

So I added my folder, added my controller class, used the new Microsoft.AspNetCore.Mvc reference and, unsurprisingly, it didn’t work. With no nice tutorial to follow, I had to bounce around the docs for a while.

A couple of things are needed to get the controller to work.

Startup.cs

In an ASP.NET Core program you can use an optional startup configuration file.

Along with the Configure method required in a startup class, you can also have an optional ConfigureServices method. This takes an IServiceCollection, and it’s on this that you need to enable MVC by calling AddMvc().

It’s also in the startup class that you can define the routes for your MVC site. I decided to go for this option:

app.UseMvc(routes =>
{
    routes.MapRoute("default", "{controller=Home}/{action=Index}/{id?}");
});

but you also have the option of using attribute-based routing:

[Route("Home/Index")]
public IActionResult Index()
{
    return View();
}

View

Views work in the same way as before, so things like view routing are unchanged.

However, there are a couple of dependencies that need to be added first. In your project.json add:

"Microsoft.AspNetCore.Mvc.Razor": "1.0.1",
"Microsoft.AspNetCore.StaticFiles": "1.0.0"

Then in your Program.cs, when you set up your host, you need to tell your application to use the content root so that view routing works properly:

var host = new WebHostBuilder()
    .UseKestrel()
    .UseContentRoot(Directory.GetCurrentDirectory())
    .UseStartup<Startup>()
    .Build();

Also, just as with MVC, you need to enable static files in Startup.Configure:

app.UseStaticFiles();

There was one final head scratcher that I was struggling with before I could get Razor views to work. In your project.json, if you have run “dotnet new”, you should have a section called “buildOptions”. In this section you will need to add “preserveCompilationContext”, as below:

"buildOptions": {
  "preserveCompilationContext": true,
  "debugType": "portable",
  "emitEntryPoint": true
},

Model

One of the nice features of ASP.NET Core is that it has been designed with dependency injection in mind. I’ve not really tried many of its features yet, but it was incredibly easy to set up.

We need another dependency in project.json:

"Microsoft.Extensions.DependencyInjection": "1.0.0",

Then in the ConfigureServices method in the Startup class you can register your dependencies:

services.AddTransient<IGetHomeModels, HomeModelService>();

Logging

I found the logging feature very useful; without it, I found debugging very difficult. As with dependency injection, it was pretty easy to set up.

Another package reference:

"Microsoft.Extensions.Logging.Console": "1.0.0"

and enable logging in the Configure method:

loggerFactory.AddConsole(LogLevel.Error);

Visual Studio Code IDE

I used Visual Studio Code to set up my MVC site and I was really surprised by how well it works as a C# IDE. I was worried that without ReSharper it was going to feel like one-finger typing.

However, even from this tiny project I was able to use several shortcuts within VSCode.

VSCode was able to “implement interface” on a class, just like in full Visual Studio.

Code snippets are also available, such as “ctor” to create a constructor within the current class.

Another feature I was surprised to see was the ability to rename easily. Just hit F2 and the rest is taken care of.

As I’m still getting used to the package system in ASP.NET Core, another useful feature is “remove unused usings”.

With all these IDE features, and I’m sure there are loads more I just haven’t seen yet, Visual Studio Code is shaping up to be a pretty competent C# IDE.

Next steps

The next couple of steps for me in ASP.NET Core are adding a test project and getting it deployed somewhere.

If you want to fork my base MVC site, here it is.